- What will be scraped

- Full Code

- Preparation

- Code Explanation

- Using Google Play Books Store API from SerpApi

- Links

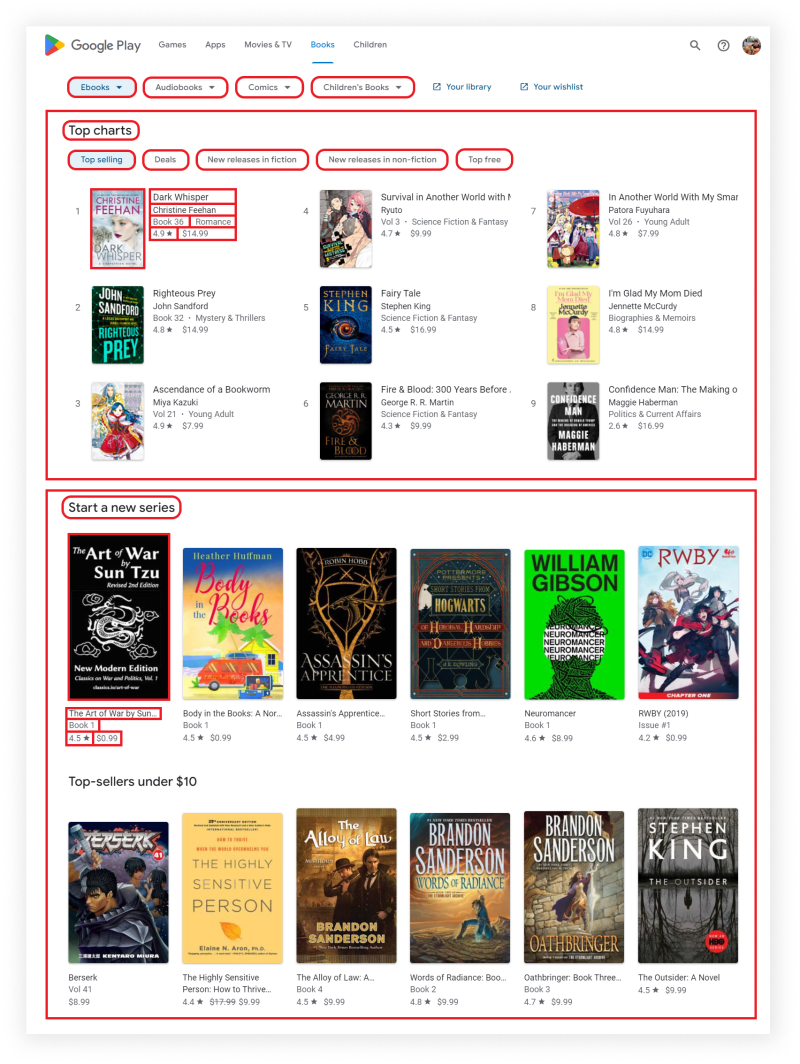

What will be scraped

📌Note: Google Play gives different results for logged-in and logged-out users.

Full Code

If you don't need an explanation, have a look at the full code example in the online IDE.

```python
import time, json
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.common.by import By
from parsel import Selector

google_play_books = []


def scroll_page(url):
    service = Service(ChromeDriverManager().install())

    options = webdriver.ChromeOptions()
    options.add_argument("--headless")
    options.add_argument("--lang=en")
    options.add_argument("user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36")
    options.add_argument("--no-sandbox")

    driver = webdriver.Chrome(service=service, options=options)
    driver.get(url)

    old_height = driver.execute_script("""
        function getHeight() {
            return document.querySelector('.T4LgNb').scrollHeight;
        }
        return getHeight();
    """)

    while True:
        driver.execute_script("window.scrollTo(0, document.querySelector('.T4LgNb').scrollHeight)")
        time.sleep(1)

        new_height = driver.execute_script("""
            function getHeight() {
                return document.querySelector('.T4LgNb').scrollHeight;
            }
            return getHeight();
        """)

        if new_height == old_height:
            break

        old_height = new_height

    # top charts need the live driver to click the chart buttons
    scrape_top_charts(driver=driver)

    selector = Selector(driver.page_source)
    driver.quit()

    return selector


def scrape_top_charts(driver):
    section = {
        'title': 'Top charts',
        'charts': {}
    }

    for chart in driver.find_elements(By.CSS_SELECTOR, '.b6SkTb .D3Qfie'):
        driver.execute_script("arguments[0].click();", chart)
        time.sleep(2)

        selector = Selector(driver.page_source)
        chart_title = chart.text
        section['charts'][chart_title] = []

        for book in selector.css('.itIJzb'):
            title = book.css('.DdYX5::text').get()
            link = 'https://play.google.com' + book.css('::attr(href)').get()
            author = book.css('.wMUdtb::text').get()
            rating = book.css('.ubGTjb div .w2kbF::text').get()
            rating = float(rating) if rating else rating
            thumbnail = book.css('.j2FCNc img::attr(srcset)').get().replace(' 2x', '')
            # raw strings pass \$ to the CSS parser without an invalid-escape warning
            extension = book.css(r'.ubGTjb:nth-child(3) .w2kbF:not(:contains(\$))::text').getall()
            price = book.css(r'.ubGTjb:nth-child(3) .w2kbF:contains(\$)::text, .ubGTjb:nth-child(4) > .w2kbF::text').get()
            extracted_price = float(price[1:]) if price not in ['Free', None] else price

            section['charts'][chart_title].append({
                'title': title,
                'link': link,
                'author': author,
                'extension': extension,
                'rating': rating,
                'price': price,
                'extracted_price': extracted_price,
                'thumbnail': thumbnail,
            })

    google_play_books.append(section)


def scrape_all_sections(selector):
    for result in selector.css('.Ubi8Z section'):
        section = {}

        section['title'] = result.css('.kcen6d span::text').get()

        # top charts were already scraped by scrape_top_charts()
        if section['title'] == 'Top charts':
            continue

        section['subtitle'] = result.css('.kMqehf span::text').get()
        section['items'] = []

        for book in result.css('.UVEnyf'):
            title = book.css('.Epkrse::text').get()
            link = 'https://play.google.com' + book.css('.Si6A0c::attr(href)').get()
            extension = book.css('.VfSS8d::text').get()
            rating = book.css('.LrNMN:nth-child(1)::text').get()
            rating = float(rating) if rating else rating
            price = book.css('.VixbEe span::text').get()
            # keep "Free" (and None) as-is instead of failing on float("ree")
            extracted_price = float(price[1:]) if price not in ['Free', None] else price
            thumbnail = book.css('.etjhNc::attr(srcset)').get()
            thumbnail = thumbnail.replace(' 2x', '') if thumbnail else thumbnail

            section['items'].append({
                'title': title,
                'link': link,
                'extension': extension,
                'rating': rating,
                'price': price,
                'extracted_price': extracted_price,
                'thumbnail': thumbnail,
            })

        google_play_books.append(section)

    print(json.dumps(google_play_books, indent=2, ensure_ascii=False))


def scrape_google_play_books(lang, country, category=None):
    params = {
        'hl': lang,           # language
        'gl': country,        # country of the search
        'category': category  # defaults to Ebooks. List of all books categories: https://serpapi.com/google-play-books-categories
    }

    if params['category']:
        URL = f"https://play.google.com/store/books/category/{params['category']}?hl={params['hl']}&gl={params['gl']}"
    else:
        URL = f"https://play.google.com/store/books?hl={params['hl']}&gl={params['gl']}"

    result = scroll_page(URL)
    scrape_all_sections(result)


if __name__ == "__main__":
    scrape_google_play_books(lang='en_GB', country='US')
```

Preparation

Install libraries:

```bash
pip install parsel selenium webdriver-manager
```

Reduce the chance of being blocked

Make sure you pass a `user-agent` so that the request looks like a "real" user visit. The default `requests` user-agent is `python-requests`, and the default headless Chrome user-agent contains `HeadlessChrome`. In both cases, websites can tell that the request most likely comes from a script. Check what your user-agent is.

There's a blog post on how to reduce the chance of being blocked while web scraping that covers both basic and more advanced approaches.

Code Explanation

Import libraries:

```python
import time, json
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager
from selenium.webdriver.common.by import By
from parsel import Selector
```

| Library | Purpose |
|---|---|
| `time` | to work with time in Python. |
| `json` | to serialize the extracted data to JSON. |
| `webdriver` | to drive a browser natively, as a user would, either locally or on a remote machine using the Selenium server. |
| `Service` | to manage the starting and stopping of ChromeDriver. |
| `ChromeDriverManager` | to download the ChromeDriver binary automatically. |
| `By` | a set of supported locator strategies (`By.ID`, `By.TAG_NAME`, `By.XPATH`, etc.). |
| `Selector` | an XML/HTML parser with full XPath and CSS selector support. |

Define the list in which all the extracted data will be stored:

google_play_books = []

Top-level code environment

The function takes the `lang`, `country` and `category` parameters and passes them to the `params` dictionary, which is used to build the URL. Passing different parameter values changes the output:

```python
params = {
    'hl': lang,           # language
    'gl': country,        # country of the search
    'category': category  # defaults to Ebooks. List of all books categories: https://serpapi.com/google-play-books-categories
}
```

Note that clicking on different categories produces different links. The GIF below shows this more clearly:

To make the code work correctly with every category, a condition builds the matching link:

```python
if params['category']:
    URL = f"https://play.google.com/store/books/category/{params['category']}?hl={params['hl']}&gl={params['gl']}"
else:
    URL = f"https://play.google.com/store/books?hl={params['hl']}&gl={params['gl']}"
```

📌Note: If you don't pass a value to the `category` parameter, the generated link leads to the Ebooks category by default. To select another category, pick one from the list of all book categories.
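The same URL logic can be written with `urllib.parse.urlencode()` handling the query string, so it doesn't have to be formatted by hand. This is a minimal sketch under that assumption. `build_url()` is a hypothetical helper, and `SOME_CATEGORY_ID` is a placeholder, not a real category ID:

```python
from urllib.parse import urlencode

BASE_URL = "https://play.google.com/store/books"


def build_url(lang, country, category=None):
    """Hypothetical helper: builds the same URLs as the if/else above,
    letting urlencode() escape the query parameters."""
    query = urlencode({"hl": lang, "gl": country})
    if category:
        # a category adds a /category/<id> path segment
        return f"{BASE_URL}/category/{category}?{query}"
    # no category: the default Ebooks page
    return f"{BASE_URL}?{query}"


print(build_url("en_GB", "US"))
print(build_url("en_GB", "US", category="SOME_CATEGORY_ID"))  # placeholder ID
```

With `urlencode()`, values containing spaces or `&` are escaped for you. The f-string version in the article works fine for simple codes like `en_GB`.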

Next, the URL is passed to the `scroll_page(URL)` function, which scrolls the page and collects all the data. Its return value is passed to the `scrape_all_sections(result)` function, which extracts the necessary data. Both functions are explained under the corresponding headings below.

```python
result = scroll_page(URL)
scrape_all_sections(result)
```

This code uses the boilerplate `__name__ == "__main__"` construct, which protects users from accidentally invoking the script when they didn't intend to. It indicates that the code is a runnable script:

```python
def scrape_google_play_books(lang, country, category=None):
    params = {
        'hl': lang,           # language
        'gl': country,        # country of the search
        'category': category  # defaults to Ebooks. List of all books categories: https://serpapi.com/google-play-books-categories
    }

    if params['category']:
        URL = f"https://play.google.com/store/books/category/{params['category']}?hl={params['hl']}&gl={params['gl']}"
    else:
        URL = f"https://play.google.com/store/books?hl={params['hl']}&gl={params['gl']}"

    result = scroll_page(URL)
    scrape_all_sections(result)


if __name__ == "__main__":
    scrape_google_play_books(lang='en_GB', country='US')
```

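The code inside this block runs only when the file is executed directly. If the file is imported into another module, `__name__` holds the module's name instead of `"__main__"`, so the condition is false and `scrape_google_play_books()` is not called.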

You can watch the video "Python Tutorial: if `__name__ == '__main__'`" for more details.

Scroll page

The function takes the URL and returns a Parsel `Selector` with the full HTML structure of the page.

First, let's look at how pagination works on the Google Play Books page. The data does not load all at once. As the user scrolls down, the site loads more data in small batches.

Here, the `selenium` library is used to simulate user actions in the browser. Selenium needs ChromeDriver, which can be downloaded manually or with code, and this example uses code. `ChromeDriverManager().install()` downloads the driver binary and returns its path, and `Service` controls starting and stopping ChromeDriver:

```python
service = Service(ChromeDriverManager().install())
```

You should also add a few options so that the browser works correctly:

```python
options = webdriver.ChromeOptions()
options.add_argument('--headless')
options.add_argument('--lang=en')
options.add_argument('user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36')
options.add_argument('--no-sandbox')
```

| Chrome option | Explanation |
|---|---|
| `--headless` | to run Chrome in headless mode. |
| `--lang=en` | to set the browser language to English. |
| `user-agent` | to act as a "real" user request from the browser by passing it to the request headers. Check what your user-agent is. |
| `--no-sandbox` | to disable Chrome's sandbox, which is often needed to run ChromeDriver on servers, in containers or as root. |

Now we can start the webdriver and pass the URL to the `get()` method:

```python
driver = webdriver.Chrome(service=service, options=options)
driver.get(url)
```

The page scrolling algorithm looks like this:

1. Find out the initial page height and write the result to the `old_height` variable.
2. Scroll the page using the script and wait for the data to load.
3. Find out the new page height and write the result to the `new_height` variable.
4. If `new_height` and `old_height` are equal, stop. Otherwise, write the value of `new_height` to `old_height` and return to step 2.
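The four steps above can be sketched without Selenium by passing the height lookup, the scroll and the wait in as functions. With Selenium, these would wrap `driver.execute_script(...)` and `time.sleep(...)`. `scroll_until_stable()` and `FakePage` are hypothetical names. `FakePage` simulates a page that stops growing after three batches:

```python
def scroll_until_stable(get_height, scroll, wait):
    """Hypothetical helper: repeats steps 2-4 until the height stops changing.
    Returns how many times it scrolled (the article's code doesn't count)."""
    old_height = get_height()          # step 1
    scrolls = 0
    while True:
        scroll()                       # step 2
        wait()
        scrolls += 1
        new_height = get_height()      # step 3
        if new_height == old_height:   # step 4: nothing new was loaded
            return scrolls
        old_height = new_height


class FakePage:
    """Simulated page: each scroll loads one more batch, up to `batches`."""

    def __init__(self, batches=3, batch_height=1000):
        self.loaded = 1
        self.batches = batches
        self.batch_height = batch_height

    def height(self):
        return self.loaded * self.batch_height

    def scroll(self):
        if self.loaded < self.batches:
            self.loaded += 1


page = FakePage()
print(scroll_until_stable(page.height, page.scroll, lambda: None))  # 3
print(page.height())  # 3000
```

The loop always makes one extra scroll to confirm that nothing else loads. In a real scraper, you might also cap the number of scrolls as a safety net.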

Getting the page height and scrolling are done by passing JavaScript code to the `execute_script()` method:

```python
# step 1
old_height = driver.execute_script("""
    function getHeight() {
        return document.querySelector('.T4LgNb').scrollHeight;
    }
    return getHeight();
""")

while True:
    # step 2
    driver.execute_script("window.scrollTo(0, document.querySelector('.T4LgNb').scrollHeight)")
    time.sleep(1)

    # step 3
    new_height = driver.execute_script("""
        function getHeight() {
            return document.querySelector('.T4LgNb').scrollHeight;
        }
        return getHeight();
    """)

    # step 4
    if new_height == old_height:
        break

    old_height = new_height
```

After all the data has been loaded, the driver is passed to the `scrape_top_charts()` function. This function uses the driver to simulate user actions (button clicks) and is described in the relevant section below. That's why the data must be extracted before the driver is stopped:

```python
scrape_top_charts(driver=driver)
```

Now the HTML is processed with the Parsel package by passing it the HTML structure with all the data loaded during scrolling. The next function needs this to retrieve the data. Once all operations are done, stop the driver:

```python
selector = Selector(driver.page_source)
driver.quit()
```

The function looks like this:

```python
def scroll_page(url):
    service = Service(ChromeDriverManager().install())

    options = webdriver.ChromeOptions()
    options.add_argument("--headless")
    options.add_argument("--lang=en")
    options.add_argument("user-agent=Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/104.0.0.0 Safari/537.36")
    options.add_argument("--no-sandbox")

    driver = webdriver.Chrome(service=service, options=options)
    driver.get(url)

    old_height = driver.execute_script("""
        function getHeight() {
            return document.querySelector('.T4LgNb').scrollHeight;
        }
        return getHeight();
    """)

    while True:
        driver.execute_script("window.scrollTo(0, document.querySelector('.T4LgNb').scrollHeight)")
        time.sleep(1)

        new_height = driver.execute_script("""
            function getHeight() {
                return document.querySelector('.T4LgNb').scrollHeight;
            }
            return getHeight();
        """)

        if new_height == old_height:
            break

        old_height = new_height

    scrape_top_charts(driver=driver)

    selector = Selector(driver.page_source)
    driver.quit()

    return selector
```

Scrape top charts

This function takes the `driver`, clicks through each chart and appends the results to the `google_play_books` list.

The top charts section is not present in every category, so its structure can't be taken from the page in advance. Instead, the structure of the section is defined at the beginning of the function:

```python
section = {
    'title': 'Top charts',
    'charts': {}
}
```

The top charts section contains several charts, and their number differs between categories. That's why the `charts` dictionary starts out empty and gets one key per chart as each chart is processed.
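To show how the `charts` dictionary grows with however many charts a category has, here is a sketch that replaces the Selenium clicks with a plain list of `(chart_title, books)` pairs. `build_top_charts()` is a hypothetical helper, and the sample titles come from the output further down:

```python
def build_top_charts(charts):
    """Hypothetical helper: `charts` stands in for the chart buttons,
    as an iterable of (chart_title, books) pairs."""
    section = {"title": "Top charts", "charts": {}}
    for chart_title, books in charts:
        # one key per chart, created only when that chart is found
        section["charts"][chart_title] = []
        for book in books:
            section["charts"][chart_title].append(book)
    return section


section = build_top_charts([
    ("Top selling", [{"title": "Dark Whisper"}]),
    ("Top free", [{"title": "Saving Sarah"}]),
])
print(list(section["charts"]))  # ['Top selling', 'Top free']
```

A category with no charts simply leaves `charts` empty.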

The GIF below shows how the top charts work:

To load data from all charts, get the chart buttons with the `driver.find_elements()` method and the `.b6SkTb .D3Qfie` selector, which matches those buttons. In each iteration of the loop, the button for the corresponding chart is clicked. Then a `Selector` object is created from the updated page, and an empty list is added under the chart's title to hold its books:

```python
for chart in driver.find_elements(By.CSS_SELECTOR, '.b6SkTb .D3Qfie'):
    driver.execute_script("arguments[0].click();", chart)
    time.sleep(2)

    selector = Selector(driver.page_source)
    chart_title = chart.text
    section['charts'][chart_title] = []
```

To extract the necessary data, you need the selector that contains it. Here, it's the `.itIJzb` selector, which matches each book in the current chart. Iterate over the books in a loop:

```python
for book in selector.css('.itIJzb'):
    # data extraction will be here
```

For each book, the `title`, `link`, `author`, `extension`, `rating`, `price`, `extracted_price` and `thumbnail` are easy to extract: find the matching selector and get the text or attribute value. Note that the thumbnail is taken from the `srcset` attribute, where it has better quality:

```python
title = book.css('.DdYX5::text').get()
link = 'https://play.google.com' + book.css('::attr(href)').get()
author = book.css('.wMUdtb::text').get()
rating = book.css('.ubGTjb div .w2kbF::text').get()
rating = float(rating) if rating else rating
thumbnail = book.css('.j2FCNc img::attr(srcset)').get().replace(' 2x', '')
# raw strings pass \$ to the CSS parser without an invalid-escape warning
extension = book.css(r'.ubGTjb:nth-child(3) .w2kbF:not(:contains(\$))::text').getall()
price = book.css(r'.ubGTjb:nth-child(3) .w2kbF:contains(\$)::text, .ubGTjb:nth-child(4) > .w2kbF::text').get()
extracted_price = float(price[1:]) if price not in ['Free', None] else price
```
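The `:contains(\$)` and `:not(:contains(\$))` filters split the labels of the same block into the price and the extension. Here is a plain-Python sketch of that split. It assumes the label texts have already been extracted into a list. `split_labels()` is a hypothetical helper, and it ignores the `nth-child(4)` fallback used for prices such as `Free`:

```python
def split_labels(labels):
    """Hypothetical helper mirroring the :contains / :not(:contains) filters:
    labels with a dollar sign count as the price, the rest as the extension."""
    extension = [label for label in labels if "$" not in label]
    # first label containing a dollar sign, or None if there isn't one
    price = next((label for label in labels if "$" in label), None)
    return extension, price


print(split_labels(["Book 36", "Romance", "$14.99"]))
# (['Book 36', 'Romance'], '$14.99')
```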

📌Note: When extracting `rating` and `extracted_price`, a conditional expression converts the value only if it is present. Otherwise, the original value (`None`, or `"Free"` for the price) is kept.
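To see what those conditional expressions do on their own, here they are wrapped in two hypothetical helpers. Note the assumption that the currency symbol is a single character such as `$`. A price like `US$14.99` would raise a `ValueError`:

```python
def parse_rating(rating):
    # "4.7" -> 4.7; a missing rating (None) stays None
    return float(rating) if rating else rating


def parse_price(price):
    # "$14.99" -> 14.99 by dropping the first character (assumed to be a
    # one-character currency symbol); "Free" and None are returned unchanged
    return float(price[1:]) if price not in ["Free", None] else price


print(parse_rating("4.7"), parse_rating(None))                      # 4.7 None
print(parse_price("$14.99"), parse_price("Free"), parse_price(None))  # 14.99 Free None
```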

After the data is retrieved, it is appended to the section['charts'][chart_title] list:

```python
section['charts'][chart_title].append({
    'title': title,
    'link': link,
    'author': author,
    'extension': extension,
    'rating': rating,
    'price': price,
    'extracted_price': extracted_price,
    'thumbnail': thumbnail,
})
```

At the end of the function, the section with the extracted top charts is in turn appended to the google_play_books list:

```python
google_play_books.append(section)
```

The complete function to scrape top charts would look like this:

```python
def scrape_top_charts(driver):
    section = {
        'title': 'Top charts',
        'charts': {}
    }

    for chart in driver.find_elements(By.CSS_SELECTOR, '.b6SkTb .D3Qfie'):
        driver.execute_script("arguments[0].click();", chart)
        time.sleep(2)

        selector = Selector(driver.page_source)
        chart_title = chart.text
        section['charts'][chart_title] = []

        for book in selector.css('.itIJzb'):
            title = book.css('.DdYX5::text').get()
            link = 'https://play.google.com' + book.css('::attr(href)').get()
            author = book.css('.wMUdtb::text').get()
            rating = book.css('.ubGTjb div .w2kbF::text').get()
            rating = float(rating) if rating else rating
            thumbnail = book.css('.j2FCNc img::attr(srcset)').get().replace(' 2x', '')
            # raw strings pass \$ to the CSS parser without an invalid-escape warning
            extension = book.css(r'.ubGTjb:nth-child(3) .w2kbF:not(:contains(\$))::text').getall()
            price = book.css(r'.ubGTjb:nth-child(3) .w2kbF:contains(\$)::text, .ubGTjb:nth-child(4) > .w2kbF::text').get()
            extracted_price = float(price[1:]) if price not in ['Free', None] else price

            section['charts'][chart_title].append({
                'title': title,
                'link': link,
                'author': author,
                'extension': extension,
                'rating': rating,
                'price': price,
                'extracted_price': extracted_price,
                'thumbnail': thumbnail,
            })

    google_play_books.append(section)
```

| Code | Explanation |
|---|---|
| `css()` | to access elements by the passed selector. |
| `::text` or `::attr(<attribute>)` | to extract textual or attribute data from the node. |
| `get()` | to extract the first matching value. |
| `getall()` | to extract all matching values as a list (used for `extension`). |
| `float()` | to convert a string value to a floating-point number. |
| `replace()` | to replace all occurrences of an old substring with a new one; here it removes the `2x` descriptor from the `srcset` value. |

Scrape all sections

This function takes the `Selector` with the full HTML structure and prints all results in JSON format.

To retrieve data from all sections, use the `.Ubi8Z section` selector, which matches each section, and iterate over the sections in a loop:

```python
for result in selector.css('.Ubi8Z section'):
    # data extraction will be here
```

The `section` dictionary consists of the keys `title`, `subtitle` and `items`, whose values are retrieved for each section.

Pay attention to the check on the `title` value. It skips the top charts section, because its data is extracted differently and was already collected by the `scrape_top_charts()` function:

```python
section = {}

section['title'] = result.css('.kcen6d span::text').get()

if section['title'] == 'Top charts':
    continue

section['subtitle'] = result.css('.kMqehf span::text').get()
section['items'] = []
```

To extract the necessary data, you need the selector that contains it. Here, it's the `.UVEnyf` selector, which matches each book in the section. Iterate over the books in a loop:

```python
for book in result.css('.UVEnyf'):
    # data extraction will be here
```

Unlike the top charts, these sections don't provide the author, and the data is retrieved with different selectors:

```python
title = book.css('.Epkrse::text').get()
link = 'https://play.google.com' + book.css('.Si6A0c::attr(href)').get()
extension = book.css('.VfSS8d::text').get()
rating = book.css('.LrNMN:nth-child(1)::text').get()
rating = float(rating) if rating else rating
price = book.css('.VixbEe span::text').get()
# keep "Free" (and None) as-is instead of failing on float("ree")
extracted_price = float(price[1:]) if price not in ['Free', None] else price
thumbnail = book.css('.etjhNc::attr(srcset)').get()
thumbnail = thumbnail.replace(' 2x', '') if thumbnail else thumbnail
```

📌Note: When extracting `rating`, `extracted_price` and `thumbnail`, a conditional expression processes the value only if it is available. Otherwise, the original value is kept.
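The `replace(' 2x', '')` call removes only that exact descriptor. As an alternative to the code above, you could keep the first whitespace-separated token of the `srcset` value. This assumes the attribute holds a single `<url> 2x` candidate. `thumbnail_from_srcset()` is a hypothetical helper:

```python
def thumbnail_from_srcset(srcset):
    """Hypothetical helper: returns the URL from a single-candidate srcset
    value such as "<url> 2x"; a missing attribute (None) stays None."""
    if not srcset:
        return srcset
    # the URL is the first whitespace-separated token, so any descriptor
    # ("2x", "1.5x", "512w") is dropped, not just " 2x"
    return srcset.split()[0]


srcset = "https://books.google.com/books/publisher/content/images/frontcover/XDlcEAAAQBAJ?fife=w512-h512 2x"
print(thumbnail_from_srcset(srcset))
print(thumbnail_from_srcset(None))  # None
```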

After the data is retrieved, it is appended to the `section['items']` list:

```python
section['items'].append({
    'title': title,
    'link': link,
    'extension': extension,
    'rating': rating,
    'price': price,
    'extracted_price': extracted_price,
    'thumbnail': thumbnail,
})
```

At the end of the function, the section dictionary with the received data from the current section is added to the google_play_books list:

```python
google_play_books.append(section)
```

The complete function to scrape all sections would look like this:

```python
def scrape_all_sections(selector):
    for result in selector.css('.Ubi8Z section'):
        section = {}

        section['title'] = result.css('.kcen6d span::text').get()

        # top charts were already scraped by scrape_top_charts()
        if section['title'] == 'Top charts':
            continue

        section['subtitle'] = result.css('.kMqehf span::text').get()
        section['items'] = []

        for book in result.css('.UVEnyf'):
            title = book.css('.Epkrse::text').get()
            link = 'https://play.google.com' + book.css('.Si6A0c::attr(href)').get()
            extension = book.css('.VfSS8d::text').get()
            rating = book.css('.LrNMN:nth-child(1)::text').get()
            rating = float(rating) if rating else rating
            price = book.css('.VixbEe span::text').get()
            # keep "Free" (and None) as-is instead of failing on float("ree")
            extracted_price = float(price[1:]) if price not in ['Free', None] else price
            thumbnail = book.css('.etjhNc::attr(srcset)').get()
            thumbnail = thumbnail.replace(' 2x', '') if thumbnail else thumbnail

            section['items'].append({
                'title': title,
                'link': link,
                'extension': extension,
                'rating': rating,
                'price': price,
                'extracted_price': extracted_price,
                'thumbnail': thumbnail,
            })

        google_play_books.append(section)

    print(json.dumps(google_play_books, indent=2, ensure_ascii=False))
```

Output

Output for the `scrape_google_play_books(lang='en_GB', country='US')` function:

```json
[
  {
    "title": "Top charts",
    "charts": {
      "Top selling": [
        {
          "title": "Dark Whisper",
          "link": "https://play.google.com/store/books/details/Christine_Feehan_Dark_Whisper?id=XDlcEAAAQBAJ",
          "author": "Christine Feehan",
          "extension": [
            "Book 36",
            "Romance"
          ],
          "rating": 4.7,
          "price": "$14.99",
          "extracted_price": 14.99,
          "thumbnail": "https://books.google.com/books/publisher/content/images/frontcover/XDlcEAAAQBAJ?fife=w176-h264"
        },
        ... other books
      ],
      ... other charts
      "Top free": [
        {
          "title": "Saving Sarah",
          "link": "https://play.google.com/store/books/details/Kathy_Ivan_Saving_Sarah?id=uOV4EAAAQBAJ",
          "author": "Kathy Ivan",
          "extension": [
            "Book 1",
            "Romance"
          ],
          "rating": 5.0,
          "price": "Free",
          "extracted_price": "Free",
          "thumbnail": "https://books.google.com/books/publisher/content/images/frontcover/uOV4EAAAQBAJ?fife=w176-h264"
        },
        ... other books
      ]
    }
  },
  {
    "title": "New releases",
    "subtitle": null,
    "items": [
      {
        "title": "Dark Whisper",
        "link": "https://play.google.com/store/books/details/Christine_Feehan_Dark_Whisper?id=XDlcEAAAQBAJ",
        "extension": "Book 36",
        "rating": 4.7,
        "price": "$14.99",
        "extracted_price": 14.99,
        "thumbnail": "https://books.google.com/books/publisher/content/images/frontcover/XDlcEAAAQBAJ?fife=w512-h512"
      },
      ... other books
    ]
  },
  ... other sections
  {
    "title": "New to rent",
    "subtitle": null,
    "items": [
      {
        "title": "The Forever War",
        "link": "https://play.google.com/store/books/details/Joe_Haldeman_The_Forever_War?id=SUFOBQAAQBAJ",
        "extension": null,
        "rating": 4.5,
        "price": "$2.69",
        "extracted_price": 2.69,
        "thumbnail": "https://books.google.com/books/publisher/content/images/frontcover/SUFOBQAAQBAJ?fife=w512-h512"
      },
      ... other books
    ]
  }
]
```

Using Google Play Books Store API from SerpApi

This section compares the DIY solution with our solution.

The main difference is that it's a quicker approach. The Google Play Books Store API bypasses blocks from search engines, and you don't have to create the parser from scratch or maintain it.

First, we need to install google-search-results:

```bash
pip install google-search-results
```

Import the necessary libraries:

```python
from serpapi import GoogleSearch
import os, json
```

Next, we define the parameters for the request:

```python
params = {
    # https://docs.python.org/3/library/os.html#os.getenv
    'api_key': os.getenv('API_KEY'),  # your SerpApi API key
    'engine': 'google_play',          # SerpApi search engine
    'store': 'books',                 # Google Play Books
    'books_category': None            # category. List of all books categories: https://serpapi.com/google-play-books-categories
}
```

We then create a search object, which retrieves the data from the SerpApi backend. The `result_dict` dictionary holds the JSON response as a Python dict:

```python
search = GoogleSearch(params)
result_dict = search.get_dict()
```

Retrieving the data is simple: just access the corresponding key. All sections are in the `'organic_results'` key, so you need to iterate over them. For each section, we create a dictionary that holds its `title`, `subtitle` and `items`.

Some sections have no subtitle, so it is retrieved with the `dict.get()` method, which returns `None` by default if the key is missing. This reads much better than exception handling, which also prevents errors but makes the code less readable and more cumbersome:
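Here are the two approaches side by side on a hypothetical section without a `subtitle` key (the `result` dictionary is made up for illustration):

```python
# hypothetical section with no "subtitle" key
result = {"title": "New releases", "items": []}

# with exception handling
try:
    subtitle = result["subtitle"]
except KeyError:
    subtitle = None

# with dict.get(): the same result in one line
assert subtitle == result.get("subtitle")
print(result.get("subtitle"))  # None
```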

```python
google_play_books = []

for result in result_dict['organic_results']:
    section = {}
    section['title'] = result['title']
    section['subtitle'] = result.get('subtitle')
    section['items'] = []
```

📌Note: In the near future, `None` values will be handled on the backend, so you won't need the manipulations above to get data that may be missing.

The `'items'` key contains data about each book in the section, so it also needs to be iterated over in a loop. To get the data, access the corresponding key. Some data may be missing, so the `dict.get()` method is used for those keys:

```python
for item in result['items']:
    section['items'].append({
        'title': item['title'],
        'link': item['link'],
        'product_id': item['product_id'],
        'serpapi_link': item['serpapi_link'],
        'rating': item.get('rating'),
        'extension': item.get('extension'),
        'original_price': item.get('original_price'),
        'extracted_original_price': item.get('extracted_original_price'),
        'price': item['price'],
        'extracted_price': item['extracted_price'],
        'video': item.get('video'),
        'thumbnail': item['thumbnail'],
    })
```

The `dict.get(keyname, value)` method could be used for every key, but here it is used only for keys that may be missing, to show how to handle absent data.
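Here is a quick look at the optional second argument, using a hypothetical `item` dictionary. When the key is missing, `get()` returns the value you pass instead of `None`:

```python
# hypothetical item without a "rating" key
item = {"title": "Dark Whisper", "price": "$14.99"}

print(item.get("rating"))               # prints: None (the default default)
print(item.get("rating", "no rating"))  # prints: no rating (a custom default)
print(item.get("price", "no price"))    # prints: $14.99 (key exists, default ignored)
```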

Example code to integrate:

```python
from serpapi import GoogleSearch
import os, json

params = {
    # https://docs.python.org/3/library/os.html#os.getenv
    'api_key': os.getenv('API_KEY'),  # your SerpApi API key
    'engine': 'google_play',          # SerpApi search engine
    'store': 'books',                 # Google Play Books
    'books_category': None            # category. List of all books categories: https://serpapi.com/google-play-books-categories
}

search = GoogleSearch(params)    # where data extraction happens on the SerpApi backend
result_dict = search.get_dict()  # JSON -> Python dict

google_play_books = []

for result in result_dict['organic_results']:
    section = {}
    section['title'] = result['title']
    section['subtitle'] = result.get('subtitle')
    section['items'] = []

    for item in result['items']:
        section['items'].append({
            'title': item['title'],
            'link': item['link'],
            'product_id': item['product_id'],
            'serpapi_link': item['serpapi_link'],
            'rating': item.get('rating'),
            'extension': item.get('extension'),
            'original_price': item.get('original_price'),
            'extracted_original_price': item.get('extracted_original_price'),
            'price': item['price'],
            'extracted_price': item['extracted_price'],
            'video': item.get('video'),
            'thumbnail': item['thumbnail'],
        })

    google_play_books.append(section)

print(json.dumps(google_play_books, indent=2, ensure_ascii=False))
```

Output:

```json
[
  {
    "title": "New releases",
    "subtitle": null,
    "items": [
      {
        "title": "Dark Whisper",
        "link": "https://play.google.com/store/books/details/Christine_Feehan_Dark_Whisper?id=XDlcEAAAQBAJ",
        "product_id": "XDlcEAAAQBAJ",
        "serpapi_link": "https://serpapi.com/search.json?engine=google_play_product&gl=us&hl=en&product_id=XDlcEAAAQBAJ&store=books",
        "rating": 4.6,
        "extension": null,
        "original_price": null,
        "extracted_original_price": null,
        "price": "$14.99",
        "extracted_price": 14.99,
        "video": null,
        "thumbnail": "https://books.google.com/books/publisher/content/images/frontcover/XDlcEAAAQBAJ?fife=w256-h256"
      },
      ... other items
      {
        "title": "Santa's Little Yelpers: An Andy Carpenter Mystery",
        "link": "https://play.google.com/store/books/details/David_Rosenfelt_Santa_s_Little_Yelpers?id=TnpVEAAAQBAJ",
        "product_id": "TnpVEAAAQBAJ",
        "serpapi_link": "https://serpapi.com/search.json?engine=google_play_product&gl=us&hl=en&product_id=TnpVEAAAQBAJ&store=books",
        "rating": 5.0,
        "extension": null,
        "original_price": null,
        "extracted_original_price": null,
        "price": "$13.99",
        "extracted_price": 13.99,
        "video": null,
        "thumbnail": "https://books.google.com/books/publisher/content/images/frontcover/TnpVEAAAQBAJ?fife=w256-h256"
      }
    ]
  },
  ... other sections
  {
    "title": "Advice for a better life",
    "subtitle": null,
    "items": [
      {
        "title": "Atomic Habits: An Easy & Proven Way to Build Good Habits & Break Bad Ones",
        "link": "https://play.google.com/store/books/details/James_Clear_Atomic_Habits?id=lFhbDwAAQBAJ",
        "product_id": "lFhbDwAAQBAJ",
        "serpapi_link": "https://serpapi.com/search.json?engine=google_play_product&gl=us&hl=en&product_id=lFhbDwAAQBAJ&store=books",
        "rating": 4.6,
        "extension": null,
        "original_price": null,
        "extracted_original_price": null,
        "price": "$9.99",
        "extracted_price": 9.99,
        "video": null,
        "thumbnail": "https://books.google.com/books/publisher/content/images/frontcover/lFhbDwAAQBAJ?fife=w256-h256"
      },
      ... other items
      {
        "title": "What Happened to You?: Conversations on Trauma, Resilience, and Healing",
        "link": "https://play.google.com/store/books/details/Oprah_Winfrey_What_Happened_to_You?id=_BreDwAAQBAJ",
        "product_id": "_BreDwAAQBAJ",
        "serpapi_link": "https://serpapi.com/search.json?engine=google_play_product&gl=us&hl=en&product_id=_BreDwAAQBAJ&store=books",
        "rating": 4.5,
        "extension": null,
        "original_price": null,
        "extracted_original_price": null,
        "price": "$14.99",
        "extracted_price": 14.99,
        "video": null,
        "thumbnail": "https://books.google.com/books/publisher/content/images/frontcover/_BreDwAAQBAJ?fife=w256-h256"
      }
    ]
  }
]
```

Links

Add a Feature Request💫 or a Bug🐞
